Test everything: the business case for A/B testing online

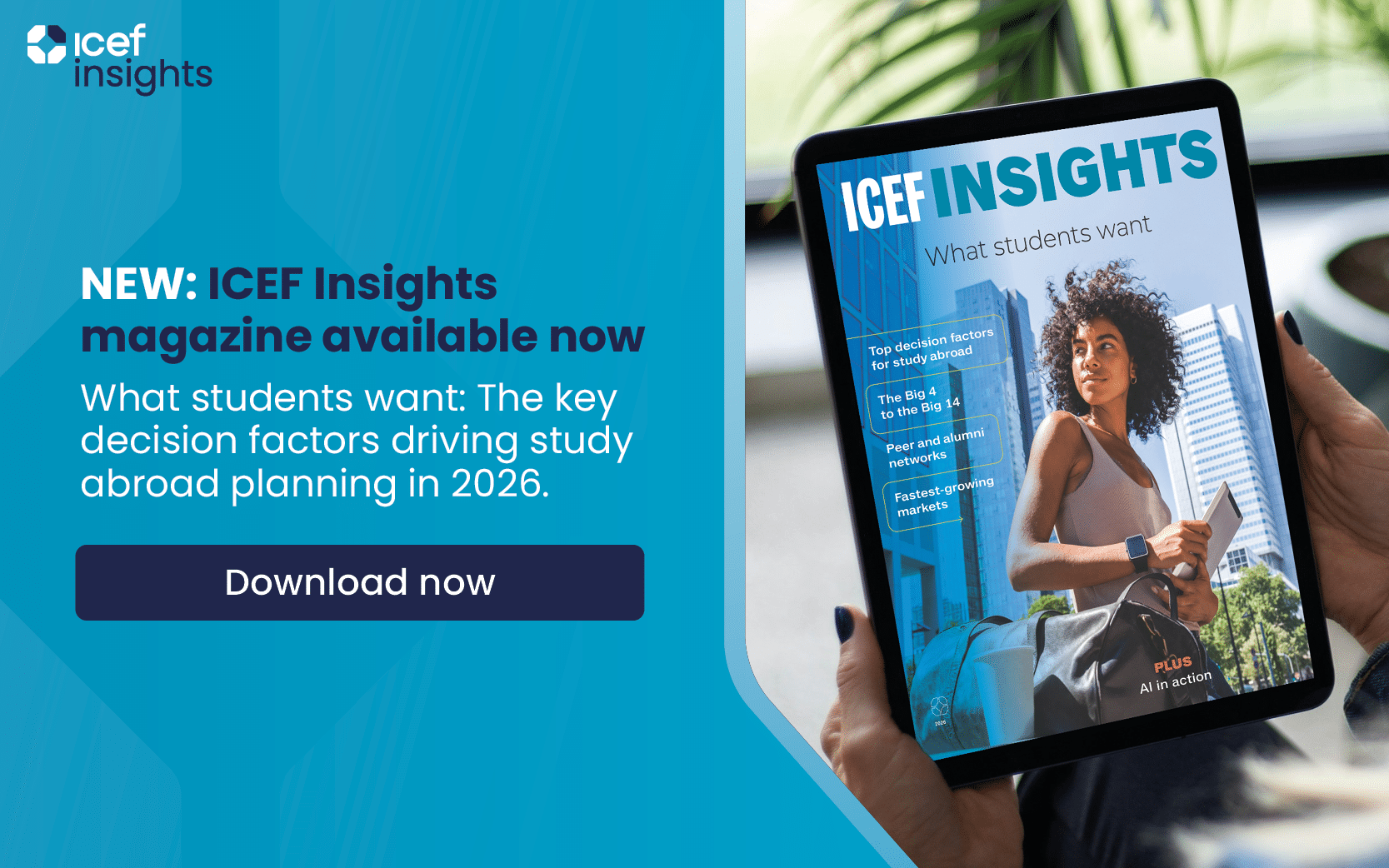

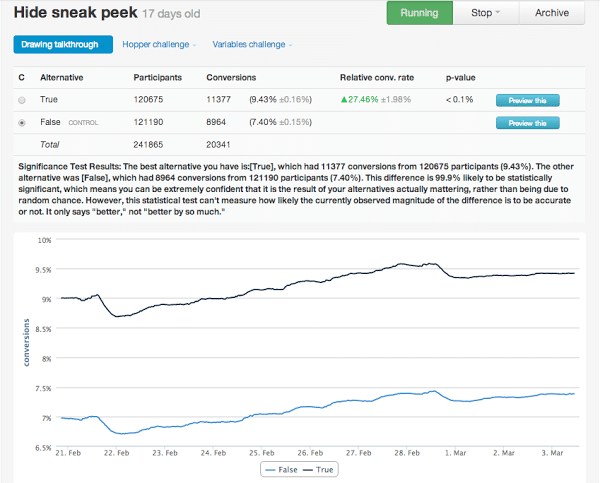

Khan Academy had a question. The non-profit had recently introduced a “sneak peek” feature into some of their online lessons. The feature was meant to encourage students to continue the lesson by giving them a preview of what they would soon learn. However, the user response to the new feature was mixed: some liked it, others seemed confused.

Khan Academy was unsure about how to proceed and so they decided to measure more carefully how the “sneak peek” was working. For one week, they ran a 50/50 test on their website that used two different versions of the same web page. Half of the visitors saw the “sneak peek” feature and the other half didn’t.
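A 50/50 split like this is commonly implemented by assigning each visitor to a version in a way that is effectively random but stays the same on repeat visits. The sketch below is only an illustration of that idea, not a description of how Khan Academy built its test: the function name `assign_variant`, the experiment label and the visitor IDs are all hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "sneak-peek") -> str:
    """Return 'A' or 'B' for a visitor; the same visitor always gets the same answer.

    Hypothetical helper: the experiment label and the visitor IDs are assumptions.
    """
    # Hash the experiment name together with the visitor ID so that
    # different experiments split the same audience independently.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).digest()
    # Treat the first 8 bytes as an integer; even/odd gives a roughly 50/50 split.
    bucket = int.from_bytes(digest[:8], "big") % 2
    return "A" if bucket == 0 else "B"


# Example: count how 10,000 made-up visitors are divided between the two versions.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1
print(counts)
```

Hashing the visitor ID, rather than flipping a coin on every page load, means a returning visitor keeps seeing the same version for the whole week of the test.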

After the week, real user data gave them a clear picture of how the feature was working. As shown in the graphic below, the “no sneak peek” version of the page was converting at a rate nearly 30% higher than the version including the “sneak peek”.

“An A/B test involves testing two versions of a web page - an A version (the control) and a B version (the variation) - with live traffic and measuring the effect each version has on your conversion rate. Start an A/B test by identifying a goal for your company then determine which pages on your site contribute to the successful completion of that goal.”
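The measurement behind a statement such as "converting at a rate nearly 30% higher" is simple arithmetic: a conversion rate for each version, then the relative difference between the two. The sketch below uses made-up visitor and conversion counts (they are not Khan Academy's figures), and the function names are ours.

```python
import math


def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the goal."""
    return conversions / visitors


def relative_lift(control_rate: float, variation_rate: float) -> float:
    """How much higher (or lower) the variation converts, relative to the control."""
    return (variation_rate - control_rate) / control_rate


# Hypothetical counts, for illustration only.
rate_a = conversion_rate(conversions=100, visitors=1_000)  # A version (control)
rate_b = conversion_rate(conversions=130, visitors=1_000)  # B version (variation)
lift = relative_lift(rate_a, rate_b)

print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  relative lift: {lift:.0%}")
```

Note that a lift of this kind is relative: in the made-up numbers above, moving from 10% to 13% is a 30% lift, but only three percentage points in absolute terms.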

A/B testing is a simple but powerful idea. It relies on good data and good processes throughout and, with those key building blocks in place, can be applied to just about anything you do online. You can test different copy or colours on your homepage, the placement of a key conversion button, the subject line in your email campaign, or pretty much any other aspect of your online presence.

Wired magazine describes how A/B testing has become standard practice for some of the world’s leading online brands.

“Over the past decade, the power of A/B testing has become an open secret of high-stakes web development. It’s now the standard (but seldom advertised) means through which Silicon Valley improves its online products.

Using A/B, new ideas can be essentially focus-group tested in real time. Without being told, a fraction of users are diverted to a slightly different version of a given web page and their behaviour compared against the mass of users on the standard site.

If the new version proves superior - gaining more clicks, longer visits, more purchases - it will displace the original; if the new version is inferior, it’s quietly phased out without most users ever seeing it.

A/B allows seemingly subjective questions of design - colour, layout, image selection, text - to become incontrovertible matters of data-driven social science.”

At the recent Travolution Summit in London, Graham Cooke, the CEO of the conversion optimisation firm Qubit, pointed out that, especially in the online space, marketing and IT teams are becoming increasingly integrated.

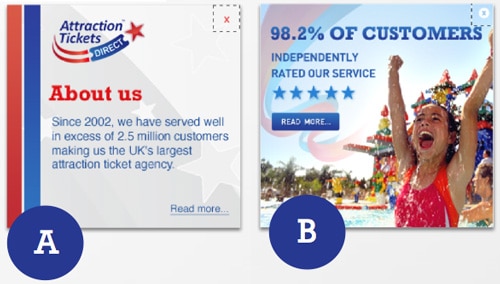

Also at Travolution, Cooke’s co-presenter, Chris Bradshaw of ATD Travel Service, demonstrated another compelling A/B case that compared two versions of an online ad for ATD.

“Let’s say you have a website where you sell house-cleaning services. You offer basic cleaning, deep cleaning, and detailed cleaning. Detailed cleaning is most profitable of the three, so you’re interested in getting more people to purchase this option. Most visitors land on your homepage, so this is the first page that you want to use for testing. For your experiment, you create several new versions of this web page: one with a big red headline for detailed cleaning, one in which you expand on the benefits of detailed cleaning, and one where you put an icon next to the link to purchase detailed cleaning. Once you’ve set up and launched your experiment, a random sample of your visitors see the different pages, including your original home page, and you simply wait to see which page gets the highest percentage of visitors to purchase the detailed cleaning. When you see which page drives the most conversions, you can make that one the live page for all visitors.”
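The house-cleaning experiment described above comes down to comparing the purchase rate of each page version and promoting the best one. The following is a minimal sketch of that comparison, assuming hypothetical visitor and purchase counts; the `PageResult` class and `best_page` function are illustrative names and are not part of Content Experiments or any other tool.

```python
from dataclasses import dataclass


@dataclass
class PageResult:
    """Outcome for one version of the homepage (all figures are made up)."""
    name: str
    visitors: int
    purchases: int  # purchases of the detailed-cleaning option

    @property
    def conversion_rate(self) -> float:
        # Guard against a version that has received no traffic yet.
        return self.purchases / self.visitors if self.visitors else 0.0


def best_page(results: list[PageResult]) -> PageResult:
    """Return the version with the highest conversion rate."""
    return max(results, key=lambda r: r.conversion_rate)


results = [
    PageResult("original home page", visitors=2_000, purchases=40),
    PageResult("big red headline", visitors=2_000, purchases=52),
    PageResult("expanded benefits", visitors=2_000, purchases=66),
    PageResult("icon next to link", visitors=2_000, purchases=44),
]

for r in results:
    print(f"{r.name}: {r.conversion_rate:.1%}")
print("Winner:", best_page(results).name)
```

With real traffic the gaps between versions are often small, so it is worth letting the experiment run long enough for each version to collect a meaningful number of visitors before choosing a winner.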

Beyond a free service like Content Experiments, there is also an increasing number of service providers specialising in A/B testing or other aspects of website optimisation, such as Optimizely, Qubit, Unbounce, and many others. The interesting thing about such services is that they often allow marketers to set up and run multiple tests without additional development or IT intervention on the websites they are testing, which opens the door to more frequent and more extensive optimisation efforts. However, putting effective processes in place to manage any such testing is a key step. A recent Marketing Sherpa survey of marketing executives found that A/B testing is a powerful window into customer behaviour once solid management processes are in place.

“Overall, 47% of marketers said they use website optimisation and testing to draw conclusions about customers. But when we segment the data, it gets interesting. The more mature the marketing organisation, the more it is able to use A/B testing to learn about customer behaviour… 76% of trial phase marketers - those who do not have a process or guidelines for optimisation or testing - do not use testing to learn about customers and build a customer theory. However, 75% of strategic phase marketers - those who do have a formal process with thorough guidelines routinely performed - use testing to learn about customer behaviour.”

In a recent post on the Harvard Business Review blog, Wyatt Jenkins, a vice president at Shutterstock, sets out some important tips when drawing up your testing processes.

- Build a small testing team. “All you need to perform tests is a 3-4 person team made up of an engineer, a designer/front-end developer, and a business analyst (or product owner).”

- Look at all the available metrics. “The metric you tried to move probably won’t tell the whole story. Plenty of very smart people have been puzzled by A/B test results. You’ll need to look at lots of different metrics to figure out what change really happened.”

- Look at results by customer segment. “Often a test doesn’t perform better on average, but does for particular customer segments, such as new vs. existing customers. The test may also be performing better for a particular geo, language, or even user persona. You won’t find these insights without looking beyond averages by digging into different segments.”

- Think small and fast. “Don’t spend months building a test just to throw it away when it doesn’t work. If you have to spend a long time creating it, then you’re doing it wrong. Find the smallest amount of development you can do to create a test based on your hypothesis; one variable at a time is best.”

- Test again. “Keep a backlog of previously run tests, and try re-running a few later. You might be surprised what you find.”
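The tip about looking at results by customer segment can be illustrated with a small sketch. In the made-up data below, the two versions convert identically on average, yet the variation does better with new customers and worse with existing ones. All figures, segment names and function names here are hypothetical.

```python
from collections import defaultdict

# Each row: (segment, version, visitors, conversions). Hypothetical figures.
rows = [
    ("new", "A", 1_000, 50),
    ("new", "B", 1_000, 80),
    ("existing", "A", 1_000, 120),
    ("existing", "B", 1_000, 90),
]


def rates_by(rows, key):
    """Conversion rate per group, where key(row) picks the grouping."""
    totals = defaultdict(lambda: [0, 0])  # group -> [conversions, visitors]
    for row in rows:
        _segment, _version, visitors, conversions = row
        totals[key(row)][0] += conversions
        totals[key(row)][1] += visitors
    return {group: conv / vis for group, (conv, vis) in totals.items()}


overall = rates_by(rows, key=lambda r: r[1])             # by version only
by_segment = rates_by(rows, key=lambda r: (r[0], r[1]))  # by segment and version

print("Overall:", overall)
for (segment, version), rate in sorted(by_segment.items()):
    print(f"{segment:>8} / {version}: {rate:.1%}")
```

Looking only at the overall figures, this test would be written off as having no effect; the segment view shows two opposite effects cancelling each other out.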

Mr Jenkins also offers an extended set of tips on his personal blog that build on the best practices in his Harvard Business Review post, and we highly recommend it for further reading on the subject.

Conversion optimisation and A/B testing are really about creating a culture of experimentation in the marketing effort. A/B testing is not a fix for every business problem, and it is especially not a basis for determining major innovations or shifts in strategy. However, conversion optimisation is an important factor in driving results for many marketers - one that is best understood as a process of incremental change and continuous improvement.